ZBra-music Dataset Description

Stimuli

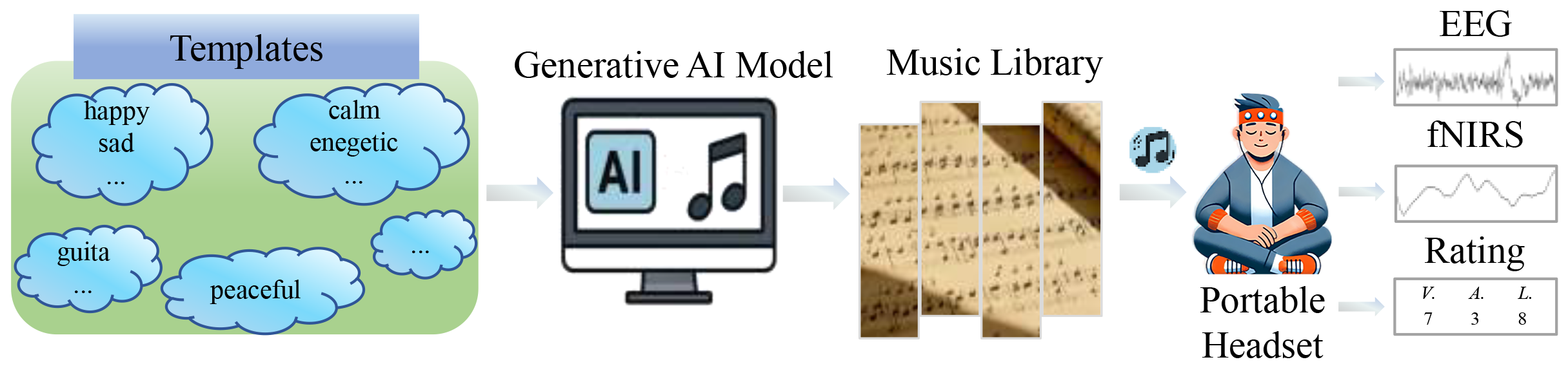

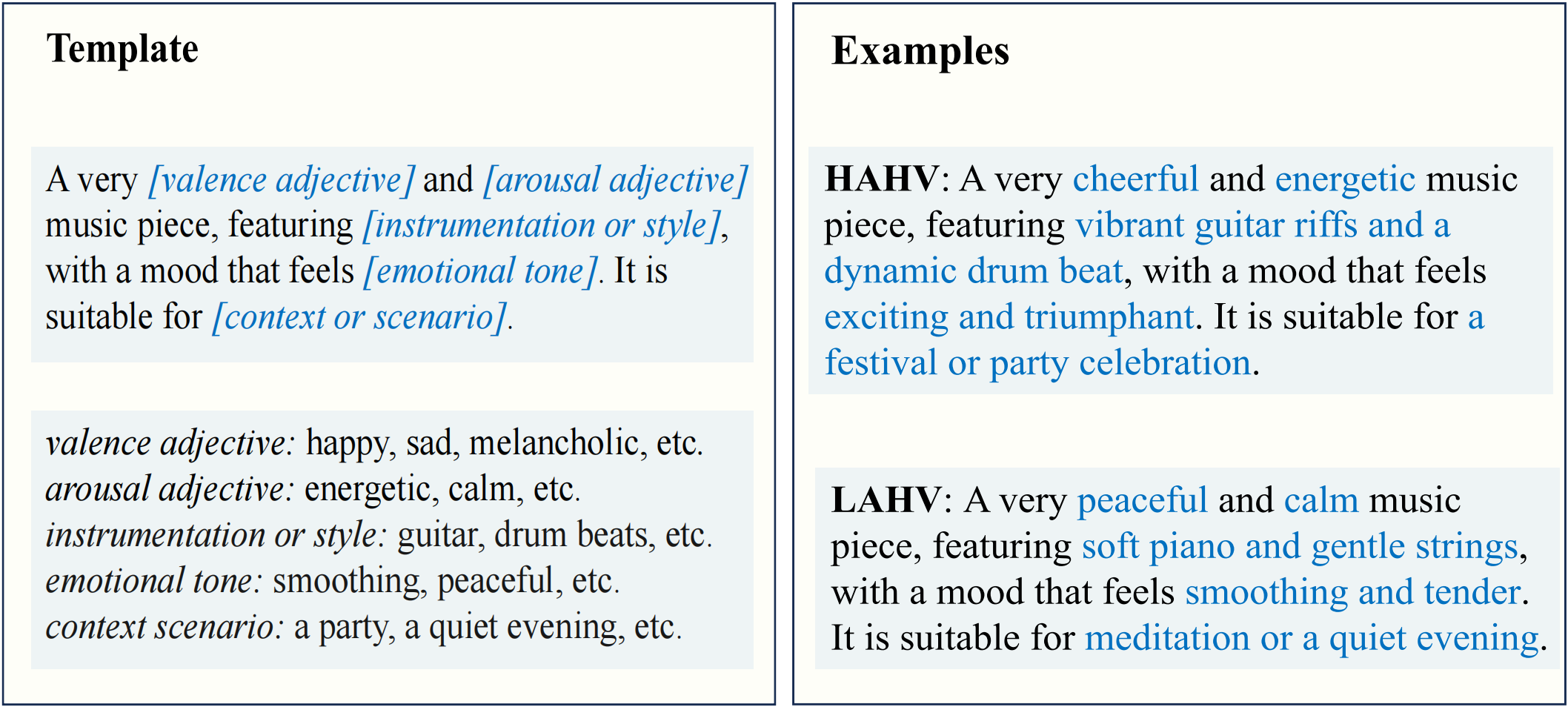

A novel framework, named MEEtBrain, was proposed to collect multi-modal brain signals induced by AI-generated music for the analysis of human affective states, as shown in the figure above. First, a music library was constructed to serve as emotional stimuli, with music clips generated automatically and at scale by a generative AI model (MusicGen). To drive the generation, a set of prompts was designed following Russell's Valence-Arousal circumplex model while taking personalized musical interests into account. Volunteers were recruited to rate every generated clip, ensuring that each clip matches its labeled emotional state.

There are 101 music clips in total, each lasting 30 seconds. The clips are categorized into four emotional states based on valence and arousal, and the categories are kept roughly balanced.
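As a minimal sketch of how a valence-arousal rating maps onto the four quadrants (labeled HAHV, HALV, LAHV, and LALV in the dataset's 'music' directory), the snippet below thresholds both dimensions at a midpoint. The function name `quadrant`, the 1-9 rating scale, and the midpoint of 5 are assumptions made for illustration; the dataset description does not state the scale or threshold actually used.

```python
def quadrant(valence: float, arousal: float, midpoint: float = 5.0) -> str:
    """Map a (valence, arousal) rating to one of the four quadrant labels.

    The default midpoint of 5.0 assumes a 1-9 rating scale. This is an
    assumption, not something stated in the dataset description.
    """
    a = "HA" if arousal >= midpoint else "LA"  # high vs. low arousal
    v = "HV" if valence >= midpoint else "LV"  # high vs. low valence
    return a + v


if __name__ == "__main__":
    print(quadrant(valence=7.5, arousal=8.0))  # high arousal, high valence
    print(quadrant(valence=2.0, arousal=3.0))  # low arousal, low valence
```

If the actual ratings use a different scale, only the `midpoint` argument needs to change.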

Data Collection

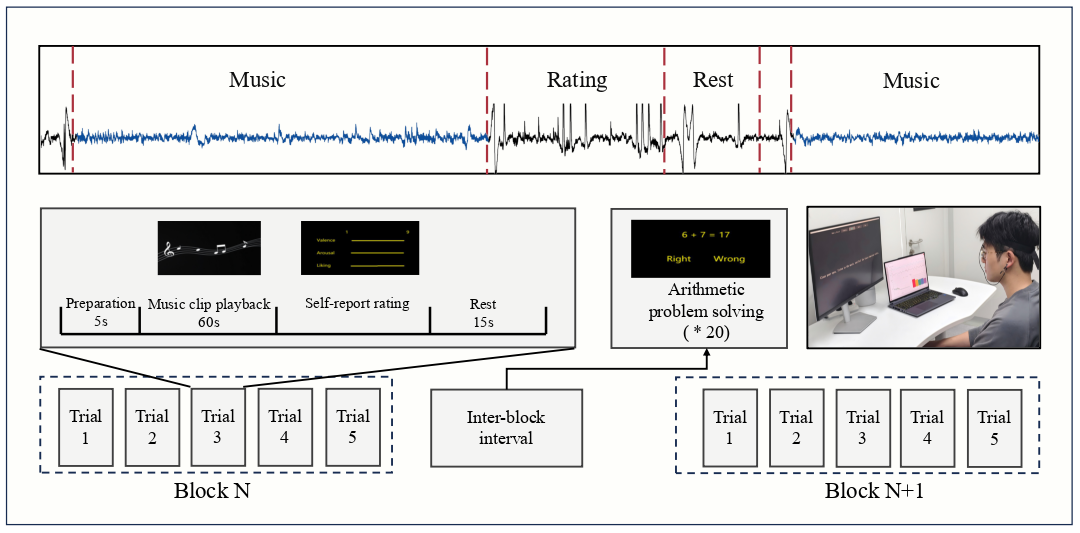

The whole data collection process is shown in the figure above. The experimental protocol required each participant to complete 40 trials, with each trial consisting of:

- a 5-second preparation period,

- a 60-second music listening phase,

- a 25-second rating period and rest interval.
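The timing above implies a fixed trial length and a nominal session duration. The sketch below works through that arithmetic and also derives the number of EEG samples in one listening phase at the 250 Hz rate given under Data Preprocess. The constant names are illustrative, and the total ignores any setup time or breaks between trials, which the protocol does not describe.

```python
PREP_S = 5        # preparation period (seconds)
LISTEN_S = 60     # music listening phase (seconds)
RATE_REST_S = 25  # rating period and rest interval (seconds)
N_TRIALS = 40     # trials per participant
EEG_FS_HZ = 250   # EEG sampling rate after resampling

trial_s = PREP_S + LISTEN_S + RATE_REST_S    # length of one trial
session_min = trial_s * N_TRIALS / 60        # nominal session length
samples_per_trial = LISTEN_S * EEG_FS_HZ     # EEG samples per channel

print(trial_s, session_min, samples_per_trial)  # 90 60.0 15000
```

The 15000 samples per channel agree with the 2 x 15000 EEG array shape reported for one trial.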

Subjects

44 Chinese subjects (21 males and 23 females) aged 22-38 years participated in the experiment. To protect their privacy, each participant was anonymized and assigned a unique identifier (sub_1, sub_2, ..., sub_44).

Notice: Due to technical reasons, only half of the data was saved for three of the subjects.

Data Preprocess

1) EEG

For the public dataset, we performed only basic preprocessing on the EEG data: resampling to 250 Hz and band-pass filtering (0.1 - 45 Hz). The EEG data of one trial is therefore a 2 x 15000 array (60 seconds at 250 Hz), where the first row is Fp1 and the second row is Fp2, referenced to the left earlobe (A1) according to the international 10-20 system. We did not apply a 50 Hz notch filter.

2) fNIRS

The fNIRS data was used to calculate changes in oxyhemoglobin (HbO), deoxyhemoglobin (HbR), and total hemoglobin (HbT) concentration using the modified Beer-Lambert law. Baseline correction and band-pass filtering (0.01 - 0.1 Hz) were then applied to the HbO, HbR, and HbT data. We also applied a 0.5 - 4 Hz band-pass filter to the optical density changes to obtain a signal similar to a photoplethysmogram (PPG), which contains heart rate information. The details of fNIRS processing can be found in 'fnirs_process.py'.

Dataset Summary

The ZBra-music dataset contains data from 44 subjects (preprocessed EEG, fNIRS, and PPG), recorded while they listened to AI-generated music clips. The dataset is organized in the following directory structure:

1) 'music' directory:

There are four subdirectories, each corresponding to one of the four emotional states (HAHV, HALV, LAHV, LALV).

The music ratings information is stored in a JSON file named 'ratings.json' in this directory, containing the mean rating scores for each music clip.

2) 'eeg' / 'fnirs' / 'ppg' directory:

There are 44 subdirectories, each containing the EEG, fNIRS, and PPG data for an individual subject. Within each subdirectory, you will find a CSV file named 'label.csv', which stores the rating scores for every trial.

3) 'sub_info.csv':

This file contains the information of all subjects, including 'ID', 'GENDER' and 'AGE'.

Notice: Because of the large size of the dataset, the data of the 44 subjects is split across two directories, 'sub_1_20' and 'sub_21_44'.
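As a rough sketch of how the layout above might be navigated, the snippet below builds the path to a subject's 'label.csv' and reads it with the standard library. The helper names (`subject_group`, `label_path`, `read_labels`) are hypothetical. The nesting order `<root>/<group>/<modality>/sub_<id>/label.csv` and the presence of a header row in 'label.csv' are assumptions, since neither is specified here; adjust both to match the downloaded archive.

```python
import csv
from pathlib import Path

MODALITIES = ("eeg", "fnirs", "ppg")


def subject_group(sub_id: int) -> str:
    """Return the top-level directory that holds a given subject."""
    if not 1 <= sub_id <= 44:
        raise ValueError(f"subject ID must be in 1..44, got {sub_id}")
    return "sub_1_20" if sub_id <= 20 else "sub_21_44"


def label_path(root, modality: str, sub_id: int) -> Path:
    """Build the path to label.csv (the nesting order is an assumption)."""
    if modality not in MODALITIES:
        raise ValueError(f"unknown modality: {modality!r}")
    return (Path(root) / subject_group(sub_id) / modality
            / f"sub_{sub_id}" / "label.csv")


def read_labels(path) -> list:
    """Read label.csv into a list of dicts, one per trial.

    Assumes the first row is a header; the column names are not
    documented here, so none are hard-coded.
    """
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


if __name__ == "__main__":
    print(label_path("ZBra-music", "eeg", 3))
```

Keeping the group lookup in one function means only `subject_group` and `label_path` need to change if the real archive nests the directories differently.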

Download

Download ZBra-music Dataset

Reference

If you find the dataset helpful for your study, please cite the following reference in your publications.

[1] Sha Zhao, Song Yi, Yangxuan Zhou, Jiadong Pan, Jiquan Wang, Jie Xia, Shijian Li, Shurong Dong, and Gang Pan. Wearable Music2Emotion: Assessing Emotions Induced by AI-Generated Music through Portable EEG-fNIRS Fusion. In Proceedings of the 33rd ACM International Conference on Multimedia (MM '25), October 27-31, 2025, Dublin, Ireland. https://doi.org/10.1145/3746027.3755270